New measurement systems/equipment

Assessing new equipment statistically helps ensure that it meets the organization’s needs. The assessment indicates whether different people can work effectively with the equipment. It also establishes a performance baseline, so if the equipment later deteriorates, a repeat study can quantify the problem against that baseline.

Measurement systems/equipment being used for SPC

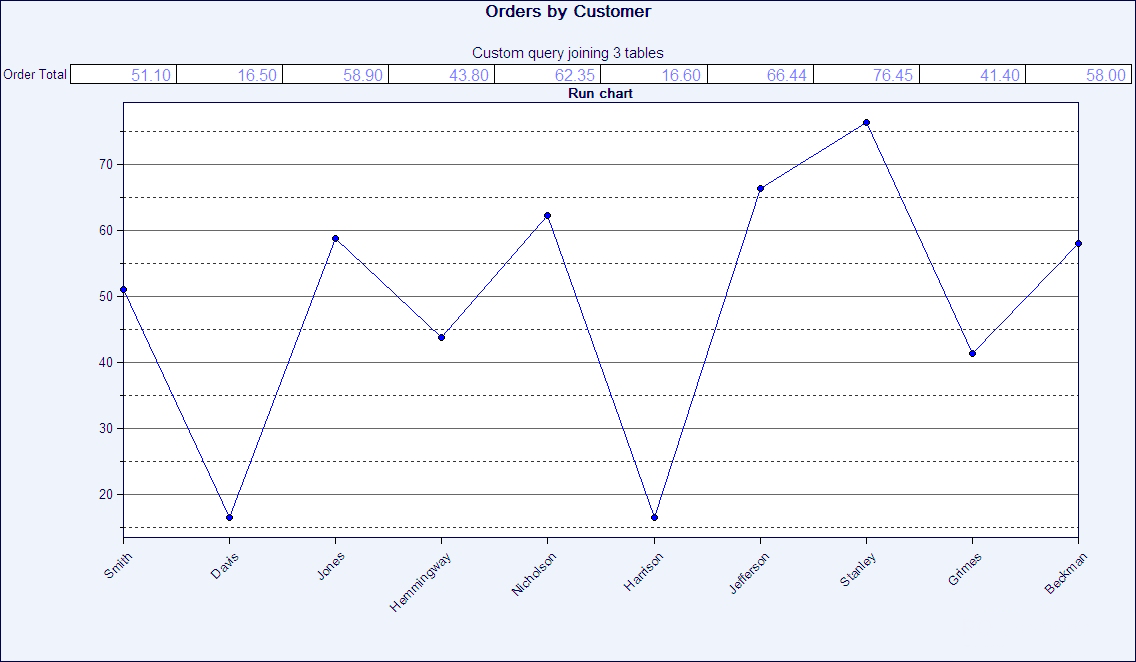

If the variation from the measurement system is high, then control charts will show changes in the measurement equipment, not changes in the process. So it is essential to assess measurement systems statistically prior to implementing SPC.

Since trends and changes apparent in SPC charts can come from the measurement system itself, it is important when trying to track down issues to understand the effect of measurement variation.
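As a rough illustration of why this matters, the sketch below assumes that process variation and measurement variation are independent, so their variances add. The function names and the numbers are hypothetical and are not taken from any particular study or from GAGEpack.

```python
import math


def observed_sigma(process_sigma: float, measurement_sigma: float) -> float:
    """Standard deviation seen in the data when independent process and
    measurement variation combine (variances add, not standard deviations)."""
    return math.sqrt(process_sigma ** 2 + measurement_sigma ** 2)


def measurement_share(process_sigma: float, measurement_sigma: float) -> float:
    """Fraction of the observed variance that comes from the measurement system."""
    total_variance = process_sigma ** 2 + measurement_sigma ** 2
    return measurement_sigma ** 2 / total_variance


# Hypothetical values, in the same units as the measured characteristic.
process_sigma = 3.0
measurement_sigma = 4.0

print(observed_sigma(process_sigma, measurement_sigma))     # 5.0
print(measurement_share(process_sigma, measurement_sigma))  # 0.64
```

In this made-up case, nearly two thirds of the variance plotted on the chart would come from the measurement system, so a signal on the chart would be more likely to reflect the equipment than the process.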

Measurement systems/equipment used at critical decision points

If a measurement is taken to assess whether to pass or fail a batch, it is essential that the measurement system is able to complete the task consistently and reliably.
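The effect on a pass/fail decision can be sketched as follows. This is a minimal example that assumes measurement error is unbiased and normally distributed; the function name, the specification limit, and the values are hypothetical.

```python
from statistics import NormalDist


def prob_false_reject(true_value: float, upper_spec: float,
                      measurement_sigma: float) -> float:
    """Probability that a single reading exceeds the upper specification
    limit for a batch whose true value is within specification, assuming
    unbiased, normally distributed measurement error."""
    reading = NormalDist(mu=true_value, sigma=measurement_sigma)
    return 1.0 - reading.cdf(upper_spec)


# Hypothetical batch: true value 9.8 against an upper limit of 10.0.
noisy = prob_false_reject(true_value=9.8, upper_spec=10.0, measurement_sigma=0.2)
precise = prob_false_reject(true_value=9.8, upper_spec=10.0, measurement_sigma=0.05)

print(f"noisy gauge:   {noisy:.4f}")    # roughly 0.16
print(f"precise gauge: {precise:.6f}")  # well below 0.001
```

Under these assumptions, the noisier gauge would wrongly fail a good batch about one time in six, while the more precise gauge would almost never do so.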

A common comment from customers is: “We’ve been using this equipment for years and it has never been a problem. We regularly calibrate it and it has certificates. Why should we assess it?” Remember, a statistical assessment gives an accurate picture of the everyday variation in measurements. Equipment can pass calibration easily and yet fail the statistical assessment. Measurement systems are often viewed as correct beyond question. Don’t be blind to this critical source of variation.
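One way to see how equipment might pass calibration and still fail a statistical assessment is to separate bias from spread. The sketch below assumes that calibration mainly checks average agreement with a reference standard, while the statistical assessment looks at the variation between repeated readings. The readings and the reference value are invented for illustration.

```python
from statistics import mean, stdev


def bias_and_spread(readings: list[float], reference_value: float) -> tuple[float, float]:
    """Return (bias, spread) for repeated readings of one reference standard.

    bias   = average reading minus the known reference value
    spread = sample standard deviation of the readings
    """
    bias = mean(readings) - reference_value
    spread = stdev(readings)
    return bias, spread


# Hypothetical repeated readings of a reference standard whose value is 50.0.
readings = [48.9, 51.2, 49.5, 50.8, 49.1, 50.5]

bias, spread = bias_and_spread(readings, reference_value=50.0)
print(f"bias:   {bias:.3f}")    # close to zero: on average the gauge agrees with the standard
print(f"spread: {spread:.3f}")  # about 0.96: individual readings still scatter widely
```

Here the average agrees with the standard, which is the kind of result a calibration check would accept, yet any single reading can be off by a unit or more.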

You can also use this kind of assessment in other circumstances, including:

- Ensuring that you and your customer use similar methods of measurement;

- Ensuring that you and your suppliers use similar methods of measurement;

- Ensuring that different locations within the organization measure in a similar way;

- Assessing a measurement system before and after repair;

- Preventing measurement deterioration;

- Ensuring that a new tester is fully trained;

- Assessing two different methods of testing;

- Assessing the impact of changing environmental conditions.

To help you gain these advantages from your measurement system analysis, GAGEpack provides tools that help ensure measurement studies are completed in a timely and accurate way.